This article looks at how edge servers improve content delivery and which strategies reduce latency in practice: caching, load balancing, CDNs, network and route configuration, hardware acceleration, software-defined networking, and microservices.

Introduction to Edge Server Performance Optimization and Latency Reduction Strategies

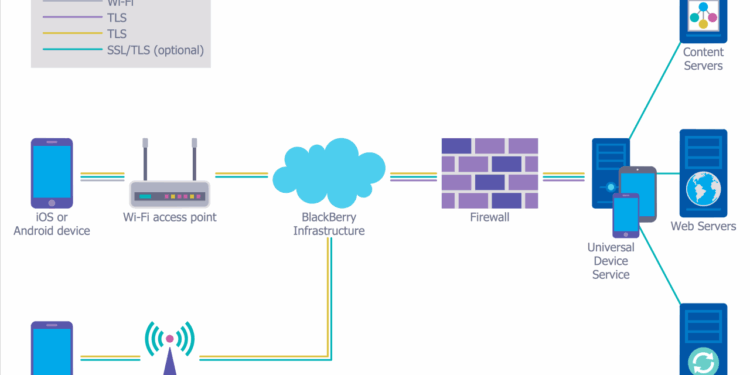

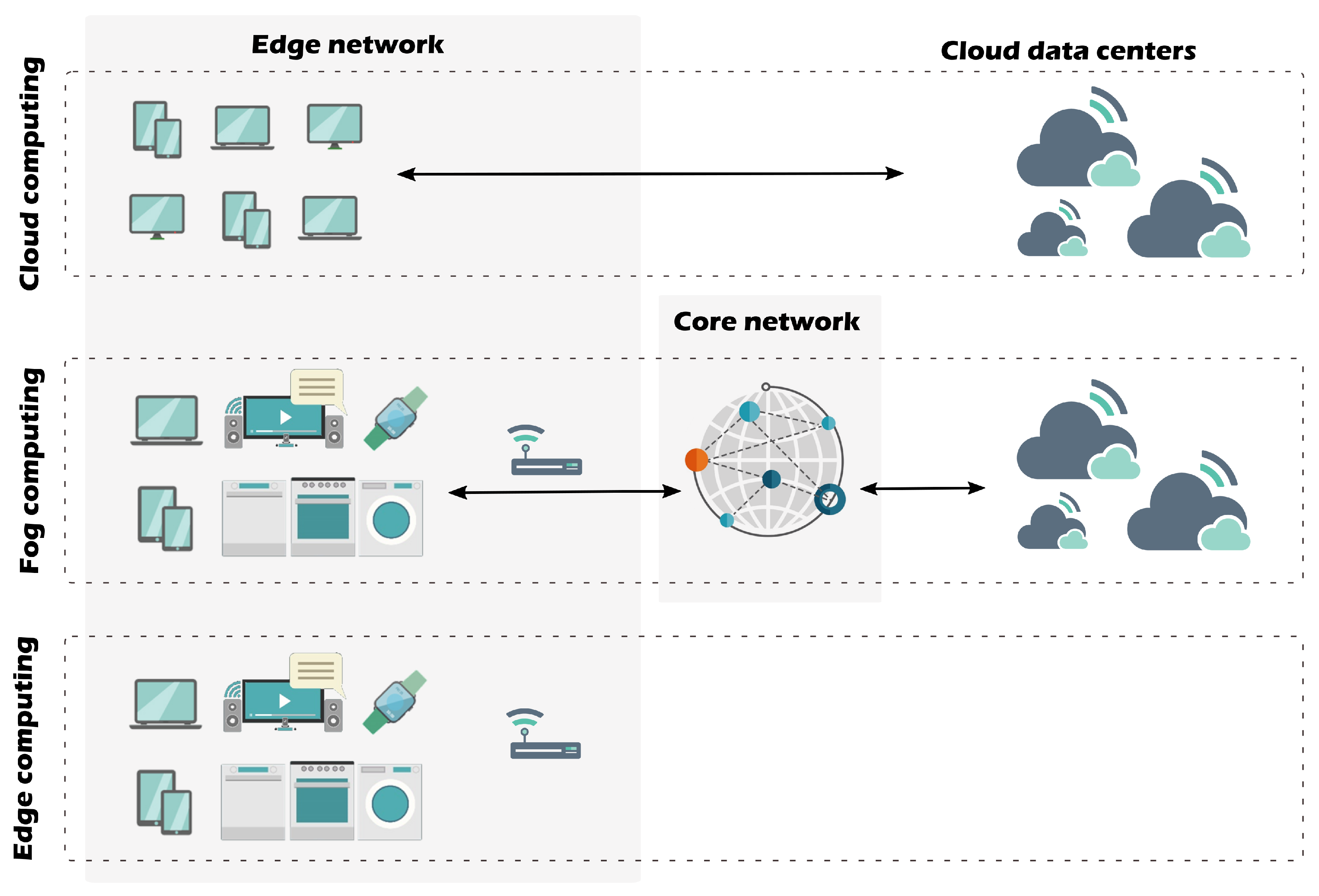

Edge servers sit between the origin server and the end-user device. They cache content closer to the user, which shortens the distance data has to travel and improves load times.

Optimizing edge server performance is essential for reducing latency, which refers to the delay between a user's action and the response from the server. By minimizing latency, websites and applications can provide a faster and more seamless user experience.

Impact of Latency on User Experience

- High latency can lead to slow page loading times, causing frustration for users and potentially driving them away from a website or application.

- Latency can also affect the performance of real-time applications like video streaming, online gaming, and video conferencing, leading to buffering, lag, and disruptions in communication.

- Optimizing edge server performance can help reduce latency, improve responsiveness, and enhance overall user satisfaction with the service.

Edge Server Optimization Techniques

Optimizing edge servers is crucial for improving content delivery speed and reducing latency. Here are some key techniques to enhance performance:

1. Caching Strategies

Caching stores frequently accessed data close to end users, so fewer requests have to go back to the origin server. The result is faster load times and lower latency, provided cached entries are expired or revalidated often enough to stay fresh.
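As a minimal sketch of the idea, the Python snippet below keeps responses in memory for a fixed time-to-live and evicts the least recently used entry when the cache is full. The names `TTLCache`, `fetch`, and `fetch_from_origin` are hypothetical and chosen only for illustration; a production edge cache would also honor HTTP cache headers, which this sketch ignores.

```python
import time
from collections import OrderedDict


class TTLCache:
    """Small in-memory cache with per-entry expiry and LRU eviction."""

    def __init__(self, max_entries=1024, ttl_seconds=60.0, clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store = OrderedDict()  # key -> (expires_at, value)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: treat as a miss
            return None
        self._store.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        self._store[key] = (self.clock() + self.ttl_seconds, value)
        self._store.move_to_end(key)
        while len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry


def fetch(path, cache, fetch_from_origin):
    """Serve from the cache when possible; otherwise ask the origin and store the result."""
    body = cache.get(path)
    if body is None:
        body = fetch_from_origin(path)  # hypothetical callable that contacts the origin
        cache.put(path, body)
    return body
```

Calling `fetch` twice for the same path within the TTL contacts the origin only once; the second call is answered from memory.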

2. Load Balancing

Implementing load balancing techniques helps distribute incoming traffic evenly across multiple servers. This not only prevents server overload but also ensures optimal resource utilization, leading to improved edge server performance and responsiveness.
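Two common ways to spread traffic are round robin (take servers in turn) and least connections (pick the server with the fewest requests in flight). The sketch below illustrates both under simplifying assumptions: the class names and the server labels are hypothetical, and health checks and server weights are left out.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._cycle = itertools.cycle(list(servers))

    def pick(self):
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Hands out the server with the fewest active requests."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Ties go to the server that was listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when the request has finished.
        self.active[server] -= 1


balancer = RoundRobinBalancer(["edge-a", "edge-b", "edge-c"])
first_four = [balancer.pick() for _ in range(4)]
# first_four is ["edge-a", "edge-b", "edge-c", "edge-a"]
```

Round robin works well when requests cost about the same; least connections tends to cope better when some requests run much longer than others.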

3. Content Delivery Networks (CDNs)

CDNs play a vital role in reducing latency by caching content on servers located closer to end-users. By serving content from nearby CDN edge servers, the distance data needs to travel is minimized, resulting in faster load times and enhanced user experience.
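To see why distance matters, the sketch below estimates the great-circle distance from a user to a few edge locations and the lowest round-trip time that distance allows. It assumes light travels roughly 200 km per millisecond in optical fiber and ignores routing detours, queuing, and server time, so real latency will be higher. The function names and the coordinates are illustrative, and real CDNs usually steer users with DNS or anycast rather than pure geography.

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0  # assumption: light in fiber covers about 200 km per millisecond


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time imposed by distance alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


def nearest_edge(user, edges):
    """Return the name of the edge location geographically closest to the user."""
    return min(edges, key=lambda name: haversine_km(*user, *edges[name]))


# Approximate coordinates, for illustration only.
edges = {
    "frankfurt": (50.11, 8.68),
    "new-york": (40.71, -74.01),
    "singapore": (1.35, 103.82),
}
user_in_paris = (48.86, 2.35)
closest = nearest_edge(user_in_paris, edges)  # "frankfurt"
```

Under this model every 1,000 km between the user and the server adds at least 10 ms of round-trip time, which is the delay a nearby edge server avoids.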

Network Optimization for Edge Servers

Reducing latency through network configuration is crucial for optimizing edge server performance. By implementing various strategies, such as utilizing proxy servers and route optimization, organizations can significantly improve the overall user experience.

Proxy Servers and Their Impact

Proxy servers act as intermediaries between client devices and edge servers, helping to improve performance by caching frequently accessed data and reducing the number of requests sent to the origin server. This can lead to a decrease in latency and faster response times for end-users.

- Proxy servers can take over work such as TLS termination and response compression, reducing the workload on the edge servers behind them.

- By caching content at the edge of the network, proxy servers can deliver content more quickly to users, especially for static resources.

- However, improper configuration or an overloaded proxy server can introduce additional latency and hinder performance.

Properly configured proxy servers can play a key role in optimizing edge server performance and reducing latency.
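One rough way to reason about this trade-off is the expected latency per request: the cache hit ratio weights the fast path against the slow one, and any queuing delay at the proxy is added on top. The function below is a simplified model, not a measurement tool; its name and parameters are hypothetical.

```python
def expected_latency_ms(hit_ratio, hit_ms, miss_ms, overhead_ms=0.0):
    """Average latency per request through a caching proxy.

    hit_ratio   -- fraction of requests answered from the proxy cache (0.0 to 1.0)
    hit_ms      -- latency when the proxy answers from its cache
    miss_ms     -- latency when the proxy has to fetch from the origin
    overhead_ms -- extra delay the proxy adds to every request, for example queuing under load
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * hit_ms + (1.0 - hit_ratio) * miss_ms + overhead_ms


# A well-tuned proxy: 90% hits at 20 ms, misses at 200 ms, so about 38 ms on average.
healthy = expected_latency_ms(0.9, 20, 200)

# A poorly tuned, overloaded proxy: 10% hits plus 30 ms of queuing, so about 212 ms,
# which is slower than the 200 ms it takes to reach the origin in this example.
overloaded = expected_latency_ms(0.1, 20, 200, overhead_ms=30)
```

The second case shows how a low hit ratio combined with proxy overhead can leave users worse off than having no proxy at all.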

Route Optimization Benefits

Route optimization involves selecting the most efficient path for data to travel between the client device and the edge server. Fewer network hops often help, but the goal is the path with the lowest total delay, which is not always the one with the fewest hops.

- Route optimization techniques, such as BGP route optimization and traffic engineering, can steer traffic away from slow or congested links and onto faster paths.

- By optimizing routes, organizations can avoid network congestion, packet loss, and other factors that contribute to increased latency.

- Continuous monitoring and adjustment of routing protocols can further enhance network performance and reduce latency for edge servers.
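Path selection by latency can be sketched as a shortest-path search over measured link delays. The example below uses Dijkstra's algorithm on a small, made-up topology; the node names and delays are hypothetical. It is a toy model: BGP itself chooses routes by policy and path attributes rather than measured latency, so this shows the goal of route optimization, not how any particular router works.

```python
import heapq


def lowest_latency_path(graph, source, target):
    """Return (total_ms, path) for the lowest-latency route, or (inf, []) if unreachable.

    graph maps each node to a dict of {neighbor: link_latency_ms}.
    """
    best = {source: 0.0}
    previous = {}
    queue = [(0.0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == target:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry, a cheaper route was already found
        for neighbor, latency in graph.get(node, {}).items():
            new_cost = cost + latency
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    if target not in best:
        return float("inf"), []
    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    path.reverse()
    return best[target], path


# Hypothetical topology: the two-hop route costs 9 ms, the three-hop route 7 ms.
network = {
    "client": {"isp-a": 5, "isp-b": 2},
    "isp-a": {"edge": 4},
    "isp-b": {"ix": 3},
    "ix": {"edge": 2},
    "edge": {},
}
total_ms, route = lowest_latency_path(network, "client", "edge")
# total_ms is 7.0 and route is ["client", "isp-b", "ix", "edge"]
```

In this topology the route with more hops is the faster one, which is why latency measurements rather than hop counts should drive routing decisions.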

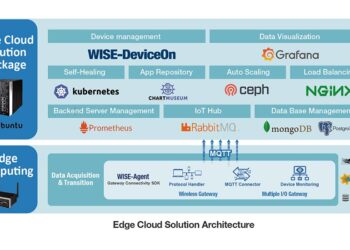

Hardware and Software Considerations

Optimizing edge server performance and reducing latency depends on both hardware and software. This section covers hardware acceleration, software-defined networking (SDN), and the impact of microservices architecture on edge server performance.

Hardware Acceleration for Optimal Performance

Hardware acceleration can improve edge server performance by offloading specific tasks to specialized components such as GPUs or FPGAs. Work that suits these devices runs faster there and frees the CPU for other requests, which lowers processing time and latency. The overall gain depends on how much of the workload can actually be offloaded.
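Amdahl's law gives a quick estimate of what offloading can achieve: the part of the work that stays on the CPU limits the overall speedup. The helper below is a small sketch of that formula; the function name and the example numbers are assumptions for illustration, not measurements.

```python
def overall_speedup(offloaded_fraction, accelerator_speedup):
    """Amdahl's law: speedup of the whole task when only part of it is accelerated.

    offloaded_fraction  -- share of the original processing time that moves to the accelerator
    accelerator_speedup -- how many times faster the accelerator runs that share
    """
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if accelerator_speedup <= 0:
        raise ValueError("accelerator_speedup must be positive")
    remaining = 1.0 - offloaded_fraction
    return 1.0 / (remaining + offloaded_fraction / accelerator_speedup)


# Hypothetical case: 60% of request time is offloaded and runs 10x faster.
# 1 / (0.4 + 0.06) is roughly 2.17, so the request as a whole is about 2.2x faster.
example = overall_speedup(0.6, 10)
```

Even an extremely fast accelerator cannot push this example past 2.5x, because 40% of the time is still spent on the CPU.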

Software-Defined Networking (SDN) for Latency Reduction

Software-defined networking (SDN) offers a dynamic and programmable approach to network management, which can help reduce latency in edge server environments. By centralizing network control and automation, SDN enables more efficient routing of data packets, prioritization of traffic, and optimization of network resources. This leads to lower latency and improved overall performance for edge servers.
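Traffic prioritization, one of the policies an SDN controller can push to the network, can be illustrated with a strict priority queue: packets in a more urgent class always leave first, and packets within a class keep their arrival order. This is a conceptual sketch in plain Python, not the API of any SDN controller; the class name, the packet labels, and the priority numbers are hypothetical.

```python
import heapq
import itertools


class PriorityScheduler:
    """Strict priority queue: a lower priority number is sent first."""

    def __init__(self):
        self._heap = []
        self._sequence = itertools.count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, packet, priority):
        heapq.heappush(self._heap, (priority, next(self._sequence), packet))

    def dequeue(self):
        """Return the next packet to send, or None when the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


scheduler = PriorityScheduler()
scheduler.enqueue("bulk-1", priority=2)
scheduler.enqueue("voice-1", priority=0)
scheduler.enqueue("web-1", priority=1)
scheduler.enqueue("voice-2", priority=0)

send_order = [scheduler.dequeue() for _ in range(4)]
# send_order is ["voice-1", "voice-2", "web-1", "bulk-1"]
```

Strict priority keeps latency-sensitive traffic fast but can starve lower classes under sustained load, so real deployments often combine it with rate limits or weighted scheduling.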

Impact of Microservices Architecture

Microservices architecture breaks an application into smaller, independent services that can be deployed and scaled individually, which offers greater flexibility, scalability, and resilience. At the edge, this makes it possible to scale only the services under load, improving resource utilization and fault tolerance. The trade-off is that every call between services adds a network hop, so service boundaries need to be chosen with latency in mind.

Conclusion

Edge server performance and latency depend on choices across caching, load balancing, network routing, hardware, and software. Applying these techniques together, and measuring their effect on real traffic, gives users faster and more reliable content delivery.